Is Tomorrow’s Embedded-Systems Programming Language Still C?

What is the best language in which to code your next project? If you are an embedded-system designer, that question has always been a bit silly. You will use C, or, if you are trying to impress management, C disguised as C++. Perhaps a few critical code fragments will be written in assembly language. But according to a recent study by the Barr Group, over 95 percent of embedded-system code today is written in C or C++.

And yet, the world is changing. New coders, new challenges, and new architectures are loosening C’s hold (some would say C’s cold, dead grip) on embedded software. According to one recent study, the fastest-growing language for embedded computing is Python, and there are many more candidates in the race as well. These languages still make up a tiny minority of code. But increasingly, the programmer who clings to C/C++ risks sounding like the assembly-code expert of 20 years ago: their way generates faster, more compact, and more reliable code. So why change?

What would drive a skilled programmer to change? What languages could credibly become important in embedded systems? And most important, what issues would a new, multilingual world create? Figure 1 suggests one. Let us explore further.

A Wave of Immigration

One major driving force is the flow of programmers into the embedded world from other pursuits. The most obvious of these is the entry of recent graduates. Not long ago, a recent grad would have been introduced to programming in a C course, and would have done most of her projects in C or C++. Not any more. “Now, the majority of computer science curricula use Python as their introductory language,” observes Intel software engineering manager David Stewart. It is possible to graduate in Computer Science with significant experience in Python, Ruby, and several scripting languages but without ever having used C in a serious way.

Other influences are growing as well. Use of Android as a platform for connected or user-friendly embedded designs opened the door to Android’s native language, Java. At the other extreme on the complexity scale, hobby developers migrating in through robotics, drones, or similar small projects often come from an Arduino or Raspberry Pi background. Their experience may be in highly compact, simple program-generator environments or small-footprint languages like B#.

The pervasiveness of talk about the Internet of Things (IoT) is also having an influence, bringing Web developers into the conversation. If the external interface of an embedded system is a RESTful Web presence, they ask, shouldn’t the programming language be JavaScript, or its server-side relative Node.js? Before snickering, C enthusiasts should observe that Node.js, a scalable platform heavily used by the likes of PayPal and Walmart in enterprise-scale development, has the fastest-growing ecosystem of any programming language, according to the tracking site modulecounts.com.

The momentum for a choice like Node.js is partly cultural, but also architectural. IoT thinking distributes an embedded system’s tasks between the client side—attached to the real world, and often employing minimal hardware—and, across the Internet, the server side. It is natural for the client side to look like a Web app supported by a hardware-specific library, and for the server side to look like a server app. Thus to a Web programmer, an IoT system looks like an obvious use for JavaScript and Node.js.

The growing complexity of embedded algorithms is another force for change. As simple control-loops give way to Kalman filters, neural networks, and model-based control, high-performance computing languages—here comes Python again, but also languages like Open Computing Language (OpenCL™)—and model-based environments like MATLAB are gaining secure footholds.

Strong Motivations

So why don’t these new folks come in, sit down, and learn C? “The real motivation is developer productivity,” Stewart says. Critics of C have long argued that the language is slow to write, error-prone, subject to unexpected hardware dependencies, and often indecipherable to anyone but the original coder. None of these attributes is a productivity feature, and all militate against the greatest source of productivity gains, design reuse.

In contrast, many more recent languages take steps to promote both rapid learning and effective code reuse. While nearly all languages today owe at least something to C’s highly compressed syntax, the emphasis has now swung back to readability rather than minimum character count. And in-line documentation (long seen by C programmers as an example of free speech in which the author is protected against self-incrimination) is in modern languages not only encouraged but often defined by structural conventions. This discipline allows, for example, utility programs that can generate a user’s manual from the structured comments in a Python module.
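As a small illustration of the structured-comment idea, the sketch below uses Python’s docstring convention and the standard-library inspect module to assemble a plain-text reference from comments embedded in the code. The names read_temperature and build_manual are hypothetical, chosen only for this example; real documentation tools do considerably more.

```python
import inspect


def read_temperature(channel):
    """Return the temperature in degrees Celsius for an ADC channel.

    Hypothetical stand-in for real driver code.
    """
    return 25.0 + channel  # placeholder value, not a real sensor read


def build_manual(functions):
    """Assemble a plain-text manual from the docstrings of the given functions."""
    sections = []
    for func in functions:
        signature = inspect.signature(func)             # e.g. "(channel)"
        doc = inspect.getdoc(func) or "(undocumented)"  # cleaned-up docstring
        sections.append(f"{func.__name__}{signature}\n{doc}")
    return "\n\n".join(sections)


manual = build_manual([read_temperature, build_manual])
print(manual)
```

Because the docstring sits in a fixed position directly under the function header, a tool can find it without parsing free-form comments.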

The modern languages also incorporate higher-level data structures. While it is certainly possible to create any object you want in C++ and to reuse it—if you can remember the clever things you did with the pointers—Python for example provides native List and Dictionary data types. Other languages, such as Ruby, are fundamentally object-oriented, allowing structure and reuse to infuse themselves into programmers’ habits.
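To make the point about built-in data structures concrete, here is a minimal sketch using Python’s native list and dict types. The sensor names and values are invented for illustration.

```python
# Hypothetical sensor log: a dict mapping sensor names to lists of readings.
readings = {"temp_c": [21.5, 21.7, 22.0], "humidity_pct": [40, 41]}

# Lists grow on demand; there is no manual allocation or pointer bookkeeping.
readings["temp_c"].append(22.4)

# setdefault creates an empty list the first time a new key is used.
readings.setdefault("pressure_kpa", []).append(101.3)

# A dict comprehension computes the mean reading per sensor.
averages = {name: sum(values) / len(values) for name, values in readings.items()}
print(averages)
```

The equivalent in C would typically require a hand-written hash table or a third-party library, plus explicit memory management.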

Two other important attributes work for ease of reuse in modern languages. One, the more controversial, is dynamic typing. When you use a variable, the interpreter—virtually all these server-side languages are interpreted rather than compiled—determines the current data type of the value you pass to the expression. Then the interpreter selects the appropriate operation for evaluating the expression with that type of data. This relieves the programmer of worry over whether the function he wants to call expects integer or real arguments. But embedded programmers and code reliability experts are quick to point out that dynamic typing is inherently inefficient at run-time and can lead to genuinely weird consequences—intentional or otherwise.
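A short Python sketch shows both sides of dynamic typing. The function scale is hypothetical; the point is that the same expression means different things depending on the run-time type of its operands, including one result that is probably not what the programmer intended.

```python
def scale(value, factor):
    """Multiply value by factor; the operation is selected at run time by type."""
    return value * factor


int_result = scale(3, 4)      # integer multiplication -> 12
float_result = scale(2.5, 4)  # floating-point multiplication -> 10.0
text_result = scale("3", 4)   # string repetition -> "3333", likely a bug

print(int_result, float_result, text_result)
```

The third call could arise if a value read as text (say, from a serial port) is never converted to a number. No error is raised; the program simply continues with the wrong data.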

The other attribute is a prejudice toward modularity. It is sometimes said that Python programming is actually not programming at all, but scripting: stringing together calls to functions written in C by someone else.

These attributes (readability, in-line documentation, dynamic typing, and heavy reuse of functions) have catalyzed an explosion of ecosystems in the open-source world. Programmers instinctively look in giant open-source libraries such as npm (for Node.js), PyPI (for Python), or RubyGems.org (for Ruby) to find functions they can use. If they have to modify a module or write a new one, they put their work back into the library. As a result, the libraries thrive: npm currently holds about a quarter-million modules. These massive ecosystems, in turn, appear to profoundly increase the productivity of programmers.

The Downside

With so many benefits, there have to be issues. And the new languages contending for space in embedded computing offer many. There are lots of good reasons why change hasn’t swept the industry yet.

The most obvious problem with most of these languages is that they are interpreted, not compiled. That means a substantial run-time package, including the interpreter itself, its working storage, overhead for dynamic typing, run-time libraries, and so on, has to fit in the embedded system. In principle all this can be quite compact: some Java virtual machines fit into tens of kilobytes. But Node.js, Python, and similar languages from the server side need their space. A Python virtual machine not stripped down below the level of real compatibility is likely to consume several megabytes, before you add your code.

Then there is the matter of performance. Interpreters read each line of code—either the source or pre-digested intermediate-level code—parse it, do run-time checks, and call routines that execute the required operations. This can lead to a lot of activity for a line of code that in C might compile into a couple of machine-language instructions. There will be costs in execution time and energy consumption.
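To see the intermediate code involved, the following sketch uses the standard-library dis module to list the bytecode that CPython generates for a one-line function. This is specific to CPython, the exact instructions vary between interpreter versions, and the function accumulate is hypothetical.

```python
import dis


def accumulate(total, sample):
    # In C, an integer add like this could compile to a single instruction.
    return total + sample


# Each entry is one bytecode instruction the interpreter must fetch,
# decode, and dispatch, with run-time type checks inside the add itself.
instructions = list(dis.get_instructions(accumulate))
for ins in instructions:
    print(ins.opname, ins.argrepr)
```

Each listed instruction is itself executed by many machine instructions inside the interpreter loop, which is where the run-time and energy cost comes from.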

Run-time efficiency is not an impossible obstacle, though. One way to improve it is to use a just-in-time (JiT) compiler. As the name implies, a JiT compiler works in parallel with the interpreter, generating compiled machine instructions for code inside loops, so subsequent traversals will execute faster. “JiT techniques are very interesting,” Stewart says. “The PyPy JiT compiler seems to speed up Python execution by a factor of about two.”

In addition, many functions called by the programs were originally written in C, and are called through a foreign function interface. Heavily-used functions may run at compiled-C speed for the simple reason that they are compiled C code.
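As one illustration of a foreign function interface, the sketch below uses Python’s standard-library ctypes module to call strlen from the C standard library. It assumes a Unix-like system such as Linux or macOS; library lookup differs on other platforms.

```python
import ctypes
import ctypes.util

# Locate the C standard library. The lookup is platform dependent; if it
# returns None, CDLL(None) falls back to symbols in the current process
# on POSIX systems.
libc_path = ctypes.util.find_library("c")
libc = ctypes.CDLL(libc_path)

# Declare the C signature: size_t strlen(const char *s);
libc.strlen.argtypes = [ctypes.c_char_p]
libc.strlen.restype = ctypes.c_size_t

# The call crosses from the interpreter into compiled C code and back.
length = libc.strlen(b"embedded")
print(length)  # 8
```

The work inside strlen runs at native speed; the interpreter’s overhead is confined to marshalling the argument and the return value.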

There are other ideas being explored as well. For example, if functions are non-blocking or use a signaling mechanism, a program rich in function calls can also be rich in threads, even before applying techniques like loop-unrolling to create more threads. Thus there is the opportunity to apply many multithreaded cores to a single module, a direction already well explored in high-performance computing. Going a step further, Ruby permits multithreading within the language itself, so it can produce threaded code even if the underlying operating system doesn’t support threads. And some teams are looking at implementing libraries or modules in hardware accelerators, such as graphics processing units (GPUs), the Xeon Phi, or FPGAs. In fact, the interpreter itself may have tasks suitable for acceleration.

Another difficulty with languages from the server side is the absence of constructs for dealing with the real world. There is no provision for real-time deadlines, or for I/O beyond network and storage in server environments. This shortcoming gets addressed in several ways.

Most obviously, the Android environment encapsulates Java code in an almost hardware-independent abstraction: a virtual machine with graphics, touch screens, audio and video, multiple networks, and physical sensors. For a lighter-weight platform with more emphasis on physical I/O and with the ability to run on even microcontrollers, there is Embedded Java.

Languages like Python require a different approach. Since the CPython interpreter runs on Linux, it can in principle be run on any embedded Linux system with sufficient speed and physical memory. There have been efforts to adapt CPython further by reducing load-time overhead, providing functions for physical I/O access and for using hardware accelerators, and adapting the run-time system to real-time constraints. One example of the latter would be the bare-metal MicroPython environment for STM32 microcontrollers. Implausible as it might seem, similar efforts are underway with the JavaScript engine underneath Node.js.

Security presents further problems. Many safety and reliability standards discourage or ban use of open-source code that has not been formally proven or exhaustively tested. Such restrictions can make module reuse impossible, or so complex as to be only marginally productive. The same level of scrutiny would extend to open-source environments such as the virtual machines. An open-source platform like CPython would at the very least raise red flags all over the reliability-and-safety community.

Finally, given the multitude of driving forces bringing new languages into the embedded world, it is not hard to envision multilingual systems with modules from a number of source languages, each chosen for its access to key libraries or its convenience to a particular developer. Of course you could host several virtual machines on different CPU cores, or unified under a hypervisor on one core, and then you could provide conventions for inter-task messaging through function calls. But the resulting system might be huge.

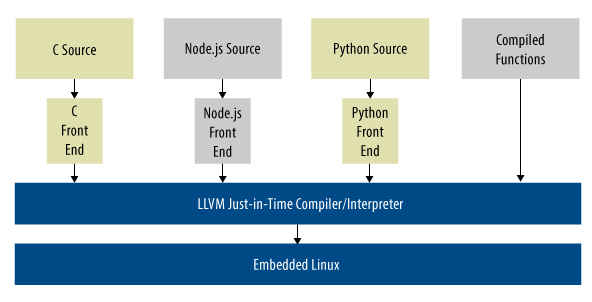

Another possibility might be a set of language-specific interpreters producing common intermediate code for a JiT compiler (Figure 2). Certainly there would still be issues to sort out, such as different inter-task communications models, memory models, and debug environments, but the overhead should be manageable.

Figure 2. A single run-time environment could handle a variety of interpreted languages in an embedded system

If these things are coming, what is a skilled embedded programmer to do? You could explore languages from the Web-programming, server, or even hobbyist worlds. You could try developing a module in both C++ and an interpreted language on your next project. There would be learning-curve time, but the extra effort might count as independent parallel development—a best practice for reliability.

Or you could observe that today the vast majority of embedded code is still written in C. And you are already a skilled C programmer. And C produces better code. All these statements are true. But substitute “assembly language” for “C”, and you have exactly what a previous, now extinct, generation of programmers said, about 20 years ago.

Still, history wouldn’t repeat itself. Would it?

Written by: Ron Wilson Source: http://systemdesign.altera.com/tomorrows-embedded-systems-programming-language-still-c/

Frank La Tella | Aug 26, 2023

One interesting embedded language I use is FORTH. Various flavors of FORTH are available for most platforms: PIC micro, Atmel, STM, etc. FORTH is a great way to develop code. Because FORTH is both a compiled and an interpreted language, development takes place on the micro itself (in real time) through a serial connection with your Linux, Mac, or Windows PC. No more write-compile-upload-test-repeat cycle. Once done you can simply “turnkey” your code to run on micro-controller boot-up. Also, being a close-to-the-“metal” language, versions of FORTH can give compiled C/C++ a run for its money. All things considered, working in FORTH has all the low-level benefits of C with the rapid development cycle of Python. I’m a heavy Julia developer (I prefer multiple dispatch over OOP!) and use FORTH in my micro-controller work. It’s a breeze to interface the two so, for my work at least, that has all my bases covered very nicely indeed.

Keep up the great work.

Frank,

from down-under.